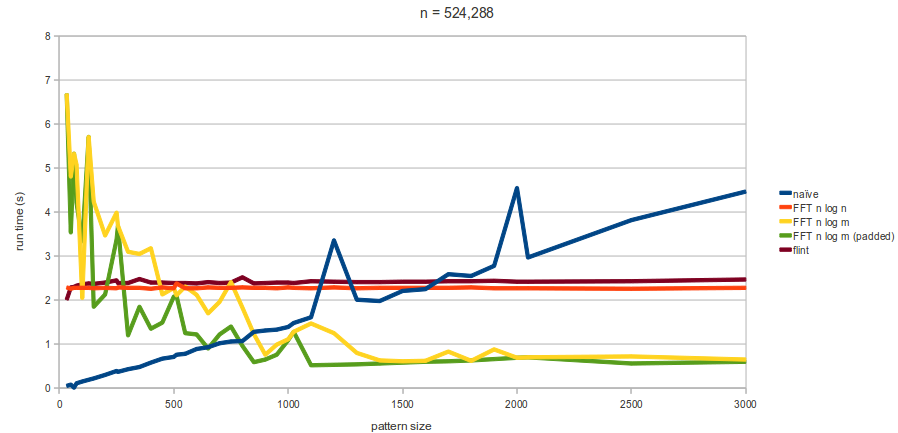

The tests that ran last night showed that the naive method bettered the version of the algorithm for pattern sizes up to somewhere between 800 and 1500 (depending on text size), whilst the version ran faster with m greater than about 750-800:

However, after those tests finished, FFTW’s “wisdom” generator ran overnight too (it basically produces the information required for plans of the specified sizes), and with that wisdom we can get even greater speed-ups from FFTW. So it's bad news for the naive method and for FLINT, which has only occasionally bettered FFTW, and then only when the pattern size has been so small that the naive method wins by far anyway.

So I’ve set up some even more thorough tests to run over the weekend, which should give us a really detailed view of both small pattern sizes and how things scale (though I’m not bothering to run the naive method on large pattern sizes; it takes too long!). That should give us the final results. In particular, it’s still not that clear when the version (padded to a power of 2 or otherwise) beats the version. This is a particularly tricky question given that the run times for the version are quite unstable as m (or the transform size, in the padded case) varies. Fortunately, I think I may have found the reason for this variation and for the strangely long run-times for small m: FFTW’s own benchmarks show a great speed variation too!

They publish a whole set of benchmarks on various platforms and for various transforms. Of particular note is that for transform sizes that are powers of 2, FFTW (like most other FFT implementations) is quite slow for small transforms in comparison to larger ones, as the following image shows:

So in the case of the version, when m is small, we’re doing lots and lots of quite slow transforms! In addition, when the size of the transform isn’t a power of 2, there’s a huge variation in the speed of the transform:

Both of these effects show up on different platforms, though in slightly different ways, so I think this probably goes a long way towards explaining the occasional oddities in run-times.
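To put rough numbers on the “lots and lots of small transforms” point, here is a minimal Python sketch. It is only a back-of-the-envelope count, not the code used in the tests. It assumes a chunking scheme that isn't spelled out above: the text of length n is cut into chunks of length 2m that start every m positions, and each chunk gets its own transform, optionally padded up to the next power of 2. The names `next_pow2` and `chunked_transform_plan` are made up for illustration.

```python
def next_pow2(k: int) -> int:
    """Smallest power of two that is >= k (for k >= 1)."""
    return 1 << (k - 1).bit_length()


def chunked_transform_plan(n: int, m: int, pad: bool = True) -> tuple[int, int]:
    """Return (number_of_chunks, transform_size) for a chunked scheme.

    ASSUMPTION (not taken from the post): chunks have length 2*m and start
    at positions 0, m, 2m, ..., so there are ceil(n / m) of them.
    """
    if not 1 <= m <= n:
        raise ValueError("need 1 <= m <= n")
    chunks = -(-n // m)          # integer ceil(n / m)
    size = 2 * m                 # unpadded transform size
    if pad:
        size = next_pow2(size)   # pad up to a power of 2
    return chunks, size


if __name__ == "__main__":
    n = 1_000_000
    for m in (8, 100, 1000, 100_000):
        chunks, size = chunked_transform_plan(n, m)
        print(f"m={m:>7}: {chunks:>7} transforms of size {size}")
```

Under these assumptions, a small m means a very large number of tiny transforms, which is exactly the size range where the benchmarks show FFT implementations at their slowest. With padding switched off, the transform size is simply 2m, which is where the non-power-of-2 variation would come in.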

The plan now is to produce some final graphs on Monday, which should hopefully wrap things up for this particular problem.