I’ve been meaning to get around to benchmarking a couple of implementations that solve the “matching with don’t cares” problem using some randomisation. This reduces the number of convolutions we need, at the cost of a small chance of a false positive. More specifically, we can reduce the number of convolutions from 3 to 2, or even to 1 if wild cards are only allowed in the pattern.

The implementation allowing wild cards in both the text and the pattern follows straight from Adam Kalai’s paper; the method that only allows wild cards in the pattern is a little different, though it follows the same broad idea. If I get time I should probably implement Kalai’s method for this case too, for completeness.
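To make the idea concrete, here is a minimal sketch of the two-convolution randomised check in Python with NumPy. It is not the benchmarked code: the names `WILD`, `cross_correlate`, `randomised_match` and `max_weight` are made up for illustration, symbols are assumed to be small non-negative integers, and the correlations use floating-point FFTs, so very long inputs would need more care with precision. Each pattern position `j` gets a random weight `r[j]`, and an alignment `i` is reported when the weighted sum of `p[j] - t[i + j]` over the non-wild positions is zero. A real match always gives zero, while a mismatching alignment gives zero with probability at most `1 / max_weight`.

```python
import numpy as np

WILD = -1  # hypothetical wild-card marker; real symbols are assumed to be >= 0


def cross_correlate(text, pattern):
    """Return c[i] = sum_j pattern[j] * text[i + j] for each full alignment i."""
    n, m = len(text), len(pattern)
    size = 1
    while size < n + m:
        size *= 2
    # Correlation is a convolution with the reversed pattern.
    ft = np.fft.rfft(text, size)
    fp = np.fft.rfft(pattern[::-1], size)
    full = np.fft.irfft(ft * fp, size)
    # Entries m-1 .. n-1 correspond to alignments 0 .. n-m.
    return np.rint(full[m - 1:n]).astype(np.int64)


def randomised_match(text, pattern, rng, max_weight=1 << 16):
    """Report alignments where the pattern matches, wild cards allowed in both.

    One-sided error: true matches are never missed, but a mismatching
    alignment is wrongly reported with probability at most 1 / max_weight.
    """
    t = np.asarray(text, dtype=np.int64)
    p = np.asarray(pattern, dtype=np.int64)
    t_mask = (t != WILD).astype(np.int64)  # 1 where the text is not wild
    p_mask = (p != WILD).astype(np.int64)  # 1 where the pattern is not wild
    r = rng.integers(1, max_weight + 1, size=len(p))  # random weights

    # sum_j r[j] * p_mask[j] * t_mask[i+j] * (p[j] - t[i+j]), split in two terms:
    a = cross_correlate(t_mask, r * p_mask * p)      # convolution 1
    b = cross_correlate(t_mask * t, r * p_mask)      # convolution 2
    return np.flatnonzero(a - b == 0)


rng = np.random.default_rng(0)
matches = randomised_match([1, 2, 1, 1, 2, WILD, 1, 2], [1, WILD, 1], rng)
```

In this sketch, if the text contains no wild cards then `t_mask` is all ones, so the first correlation collapses to the constant `sum(r * p_mask * p)` and only one convolution is left. That is one way to get down to a single convolution, and not necessarily how the benchmarked pattern-only method does it.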

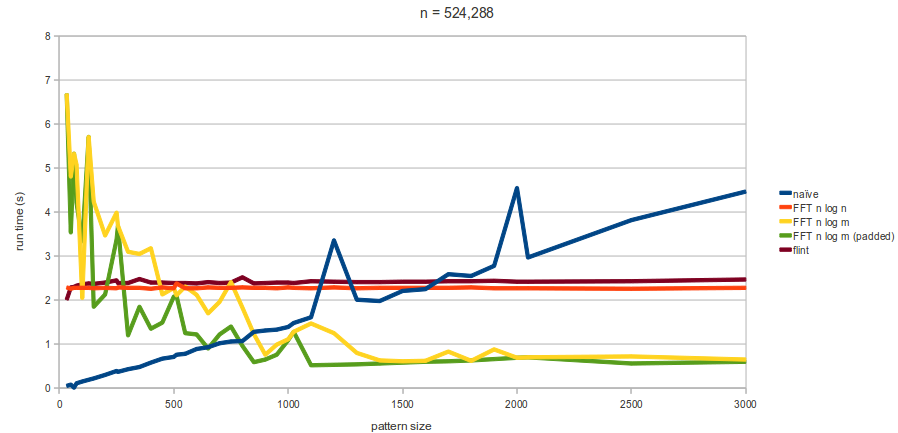

Anywho, on to the results, which are quite pleasing:

- In both cases, the point at which the randomised algorithm becomes faster than the naive method is somewhere between a half and two thirds of the corresponding point for its deterministic equivalent. This follows nicely from the reduction in the number of convolutions.

- With wild cards in the text and pattern, this is a reduction from around 80 to 45-50.

- With no wild cards in the text, we get a reduction from about 50 to 25.

You can see some graphs of this either in SVG format or in PNG format.

These results were repeated for a few different text sizes in the range of 1-4 million; I might repeat the tests overnight with a larger text size, but I don’t expect to see any difference. You can read more about the testing and results over at the page on StringPedia.